Deep Reinforcement Learning for Drone Obstacle Avoidance

Navigation

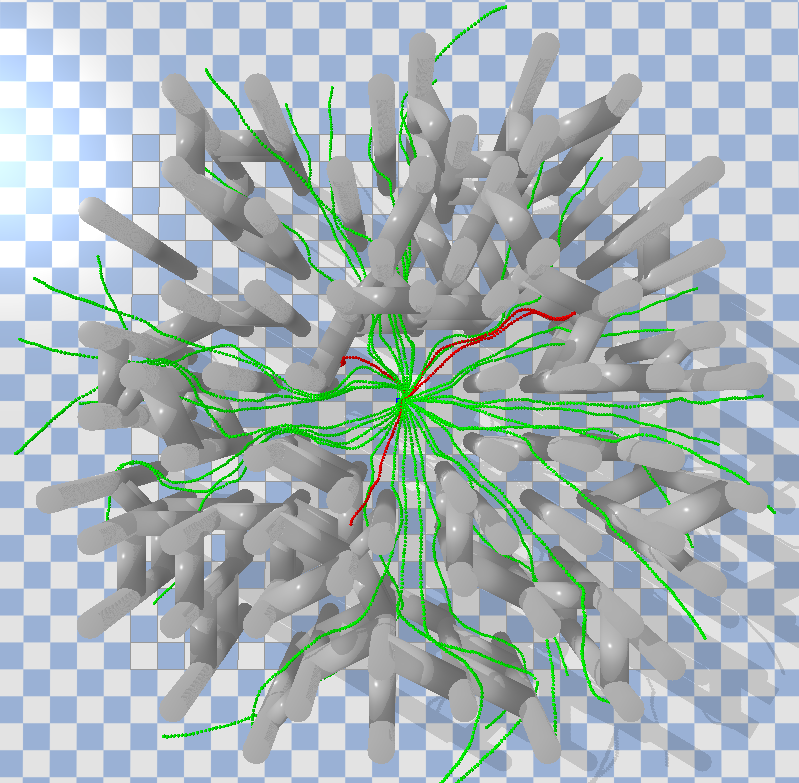

Traditional methods for UAV navigation and obstacle avoidance typically rely on a sequential, cascaded pipeline: point cloud maps are generated from depth images and then used for path planning and flight control. Although these methods offer high interpretability and precise navigation when maps are accurate, their cascaded design can lead to error accumulation. Moreover, map construction and storage demand significant computational resources, which reduces frame rates and responsiveness in dynamic environments and ultimately decreases obstacle avoidance efficiency.

Project Overview

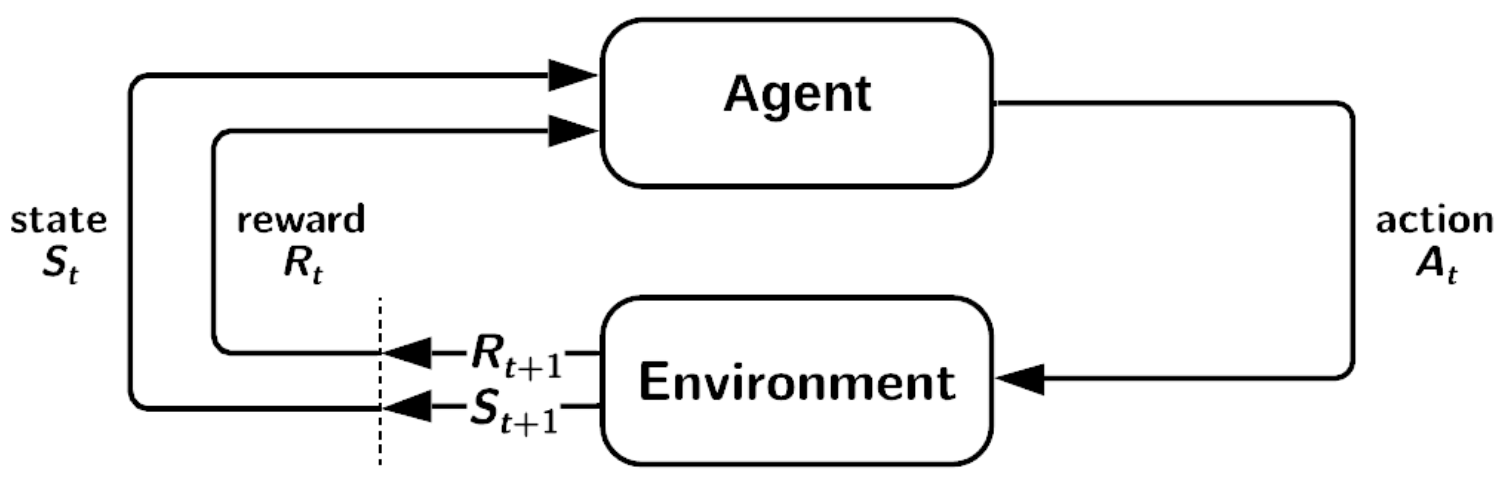

Advancements in Deep Reinforcement Learning (DRL) have introduced end-to-end visual navigation frameworks. These models map perception directly to control, eliminating the need for intermediate mapping and storage, reducing cumulative errors, and offering better adaptability in dynamic environments.

While traditional methods require manual tuning for different environments, DRL-based systems can generalize learned behaviors to new settings after sufficient training. This adaptability matters because UAVs may encounter diverse and unpredictable environments during operation.

Research Questions:

• Can DRL policies learn to navigate UAVs using depth-only perception under partial observability?

• How do DRL algorithms perform under realistic noise and occlusion?

• What is the effect of temporal memory (GRU) on decision-making in obstacle-rich environments?
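
To make the temporal-memory question concrete, here is a minimal NumPy sketch of a GRU cell carrying a hidden state across a sequence of depth features. It is an illustration only, not the project's network: the class `GRUCell`, the helper `run_sequence`, the feature and hidden sizes, and the omission of bias terms are all assumptions made for brevity.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class GRUCell:
    """Minimal GRU cell without biases (hypothetical sizes, illustration only)."""

    def __init__(self, input_size, hidden_size, seed=0):
        rng = np.random.default_rng(seed)
        scale = 1.0 / np.sqrt(hidden_size)
        # One weight matrix per gate, applied to the concatenation [x, h].
        shape = (hidden_size, input_size + hidden_size)
        self.W_z = rng.uniform(-scale, scale, shape)  # update gate
        self.W_r = rng.uniform(-scale, scale, shape)  # reset gate
        self.W_n = rng.uniform(-scale, scale, shape)  # candidate state
        self.hidden_size = hidden_size

    def step(self, x, h):
        xh = np.concatenate([x, h])
        z = sigmoid(self.W_z @ xh)
        r = sigmoid(self.W_r @ xh)
        # The reset gate scales how much of the old state feeds the candidate.
        n = np.tanh(self.W_n @ np.concatenate([x, r * h]))
        # Blend the candidate with the previous state.
        return (1.0 - z) * n + z * h


def run_sequence(cell, features):
    """Feed per-frame feature vectors in order and return the final hidden state."""
    h = np.zeros(cell.hidden_size)
    for x in features:
        h = cell.step(x, h)
    return h


cell = GRUCell(input_size=4, hidden_size=8)
current = np.zeros(4)  # same current observation in both cases
h_a = run_sequence(cell, [np.ones(4), current])
h_b = run_sequence(cell, [-np.ones(4), current])
print(np.allclose(h_a, h_b))
```

The two sequences end on the same observation but produce different hidden states, which is the property a memory-based policy could use when an obstacle seen in an earlier frame is occluded in the current one.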

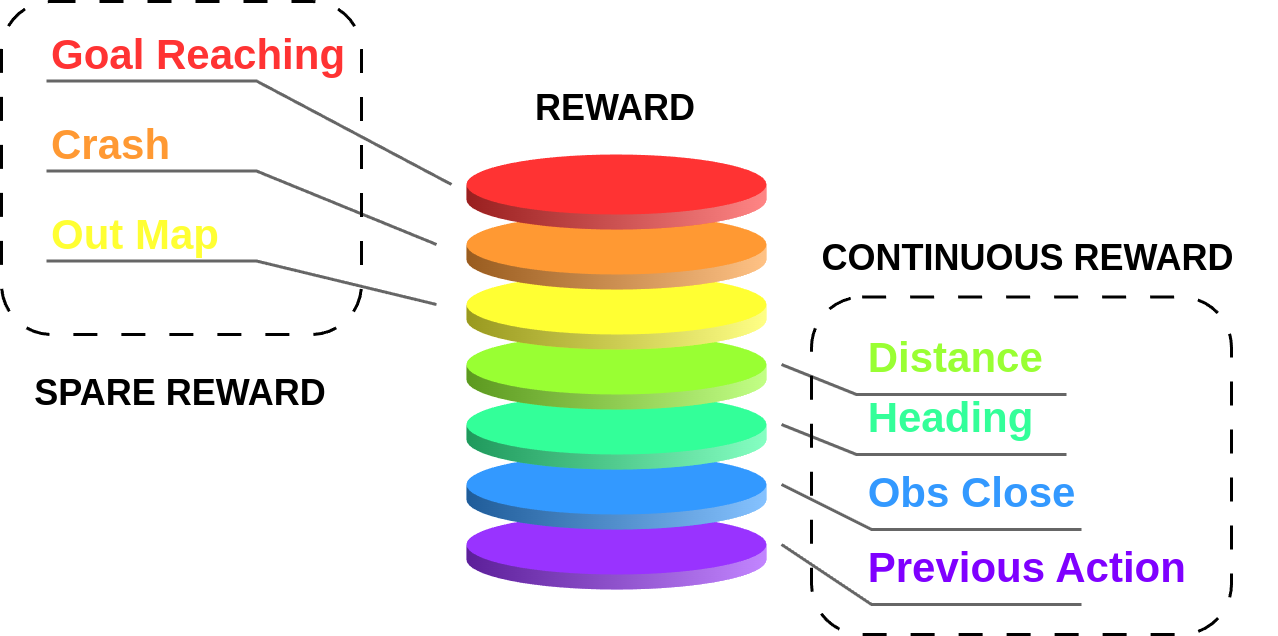

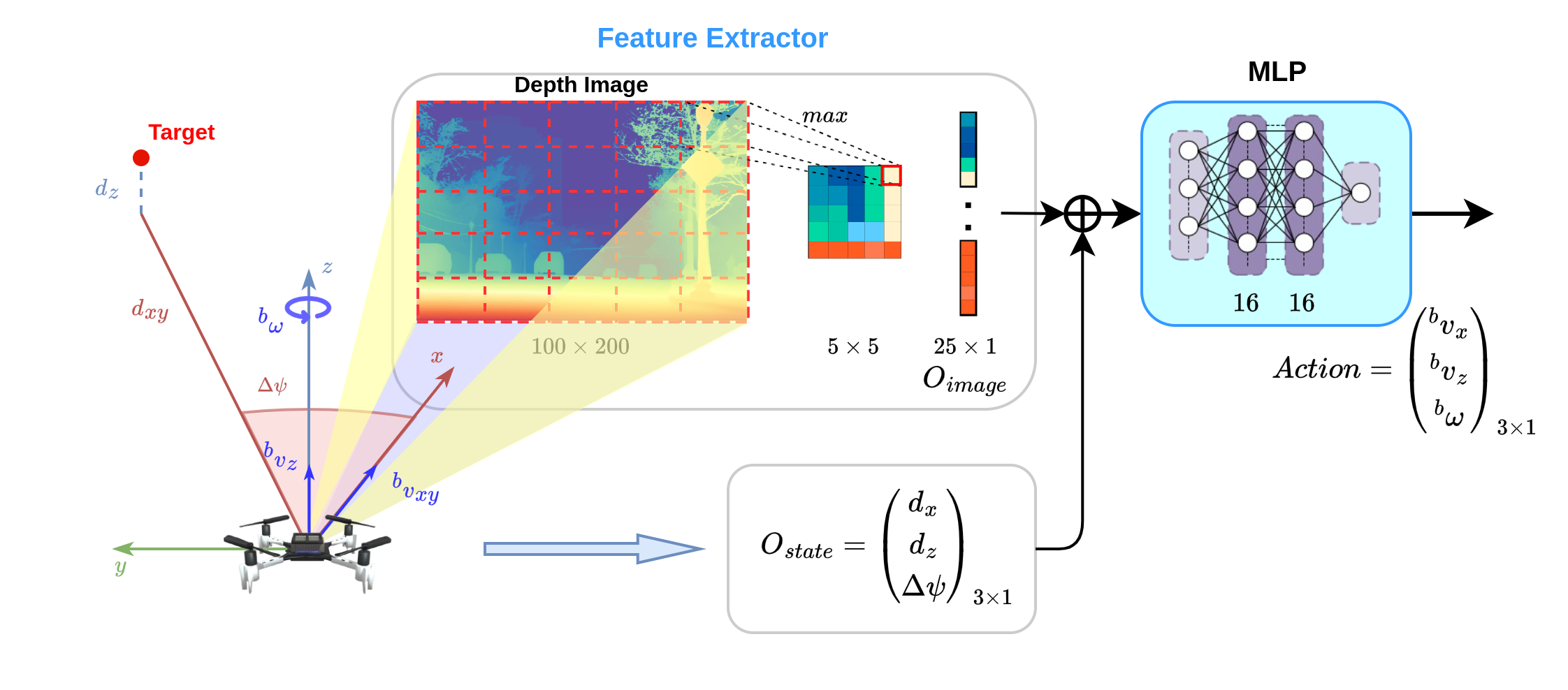

Methodology and Approach

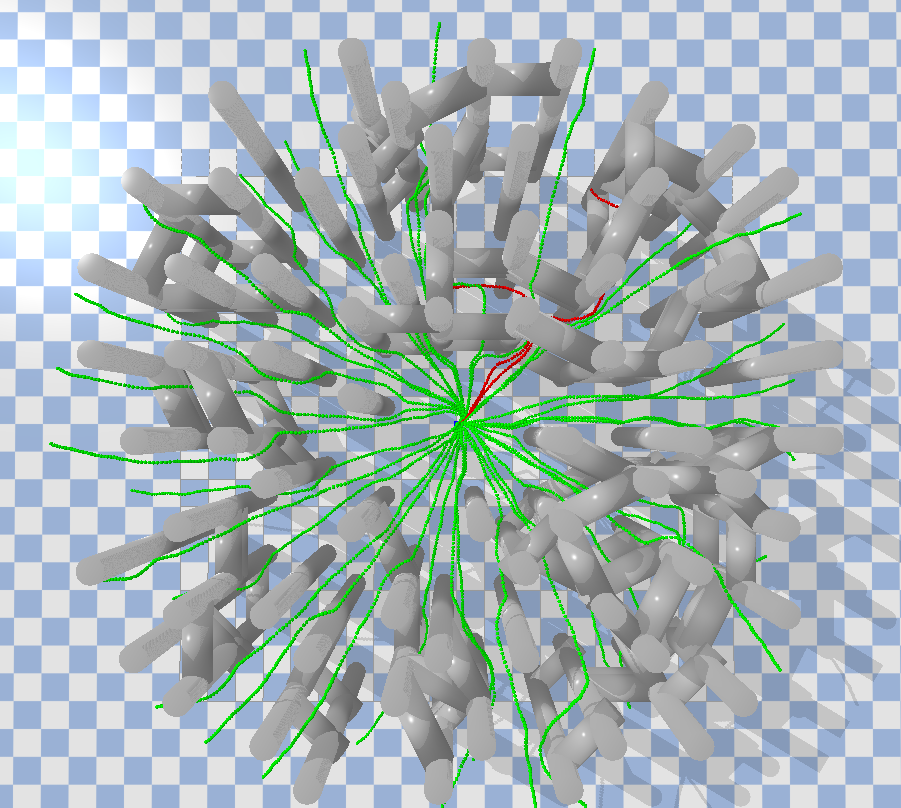

Experiments & Results

Key Insights:

• Depth-based DRL models (PPO and TD3) can learn obstacle avoidance policies effectively in simulated UAV environments.

• PPO is more stable during training, while TD3 can converge faster but is more sensitive to hyperparameters.
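
One commonly cited reason for PPO's training stability is its clipped surrogate objective, which limits how far a single update can move the policy. The sketch below shows that objective in NumPy under stated assumptions: the function name `ppo_clip_objective`, the clip range of 0.2, and the sample numbers are illustrative and are not taken from this project's configuration.

```python
import numpy as np


def ppo_clip_objective(ratio, advantage, clip_eps=0.2):
    """Mean PPO clipped surrogate objective (to be maximized).

    ratio:     new_policy_prob / old_policy_prob for each sampled action
    advantage: advantage estimate for each sampled action
    """
    ratio = np.asarray(ratio, dtype=float)
    advantage = np.asarray(advantage, dtype=float)
    unclipped = ratio * advantage
    clipped = np.clip(ratio, 1.0 - clip_eps, 1.0 + clip_eps) * advantage
    # Taking the element-wise minimum removes the incentive to push the
    # probability ratio outside [1 - clip_eps, 1 + clip_eps].
    return float(np.mean(np.minimum(unclipped, clipped)))


# Made-up values: one action became more likely, one less likely.
value = ppo_clip_objective(ratio=[1.5, 0.5], advantage=[1.0, -1.0])
print(value)
```

TD3 has no equivalent clipping on the policy update, which is consistent with the observation above that it is more sensitive to hyperparameters.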

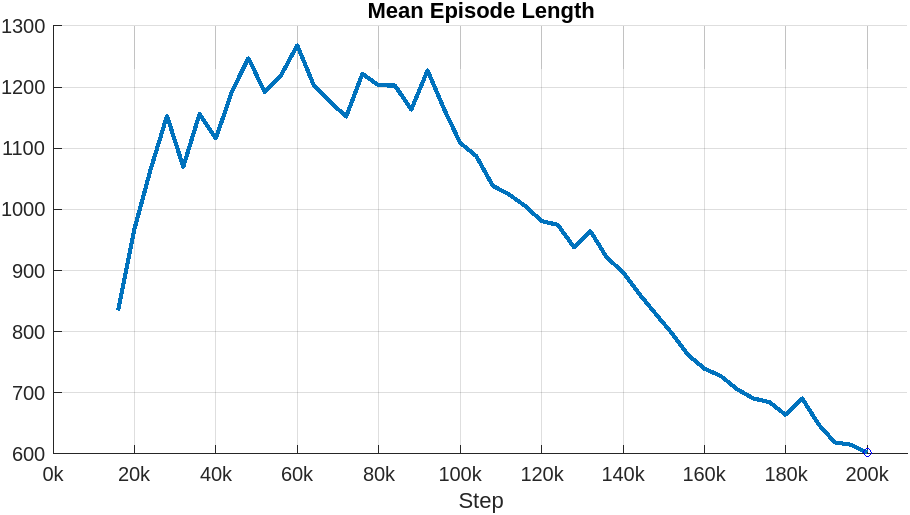

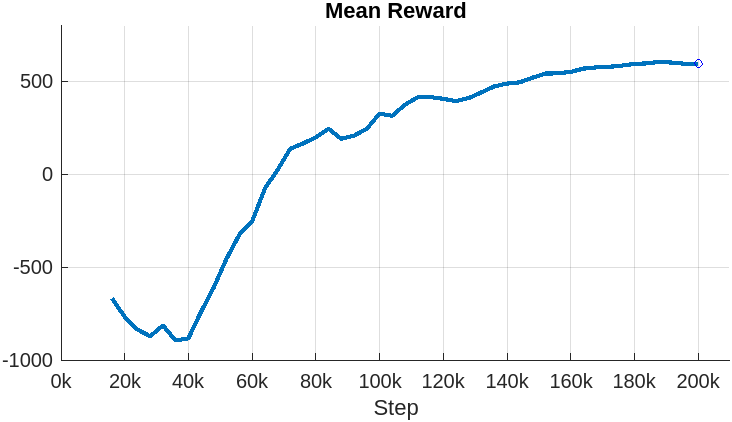

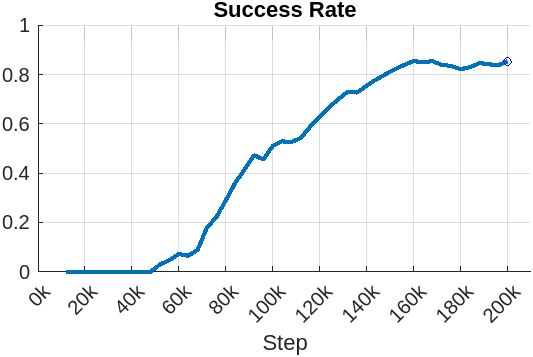

Preliminary Results:

• Achieved over 87% success rate in reaching target positions without collision in structured environments.

• Training reward trends show steady improvement after 2e5 timesteps with both algorithms.
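
A success rate of this kind can be computed by counting evaluation episodes that reach the target without any collision. The sketch below assumes a hypothetical `EpisodeResult` record and uses made-up episode outcomes. It does not reproduce the project's evaluation code or data.

```python
from dataclasses import dataclass


@dataclass
class EpisodeResult:
    """Outcome of one evaluation episode (hypothetical record layout)."""
    reached_target: bool
    collided: bool


def success_rate(results):
    """Fraction of episodes that reached the target with no collision."""
    if not results:
        raise ValueError("at least one episode is required")
    successes = sum(1 for r in results if r.reached_target and not r.collided)
    return successes / len(results)


# Made-up outcomes for illustration only.
episodes = [
    EpisodeResult(reached_target=True, collided=False),
    EpisodeResult(reached_target=True, collided=False),
    EpisodeResult(reached_target=True, collided=True),
    EpisodeResult(reached_target=False, collided=False),
]
print(success_rate(episodes))
```

Counting an episode that reaches the target after a collision as a failure matches the "without collision" wording of the reported metric.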